I did my DB2 LUW migration to DB2 LUW Version 10 and now it’s time to take advantage of all the great DB2 LUW Version 10 features. The one that is on the top of my list is the new Adaptive Compression. Adaptive Compression is the newer, better DB2 LUW compression. The new Adaptive DB2 LUW Compression is a better compression because it provides even denser compression and is a leading reason why the migration to Version 10 is happening quickly at many companies.

The new DB2 LUW Adaptive Compression combines the old “classic” table level compression with its compression dictionary with an additional DB2 page level compression. The page level compression adapts over time and provides the best compression available based on your data. Adaptive Compression can make a huge difference in your daily processes because it really compresses your data down to only use one-sixth or one-seventh of your original uncompressed storage size. This makes a huge difference in backup time, recovery time and overall application SQL performance because of each database I/O retrieves a greater amount of data. This DB2 LUW compression storage difference can help dramatically reduce your storage expenses and significantly improve your applications so they meet their processing windows.

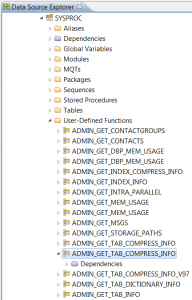

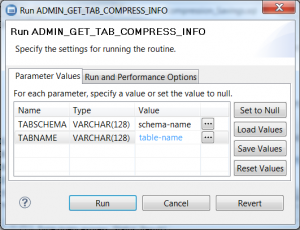

Finding out how much the new DB2 Compression Adaptive Compression could improve your database table storage is easy. First, simply go into Data Studio in the Data Source Explorer and connect to your database. Then expand the database components, expand Schemas and scroll down to SYSPROC and find the ADMIN_GET_TAB_COMPRESS_INFO User-Defined-Function (UDF). Then right-click on it and select RUN from the menu. The prompt for entering the names of your table schema and table will be displayed. After entering your table name criteria the UDF will scan your table and give you information on how much storage and improved I/O performance your table could achieve with the new Adaptive Compression. Make sure to run this analysis at an appropriate low-processing time as it will scan your table and gather many page row details. In analysis of several tables within my environment it saved an average of 75% of the pages and brought row sizes down from over 300 bytes to only over 50 bytes saving a huge amount of storage and improving my I/O tremendously.

What is great about the new DB2 LUW Adaptive Compression is that it’s easy to implement. If you don’t have compression implemented or have the old “classic” compression implemented already, an easy ALTER TABLE creator.table_name COMPRESS YES ADAPTIVE; will enable the new Adaptive Compression for your table. As your table is updated and rows inserted Adaptive Compression will compress your table data pages. If you have the opportunity to reorganize the table, you can immediately get all the new compression storage savings and I/O improvements.

DB2 LUW compression and Adaptive Compression are available for an additional DB2 licensing fee and is well worth the extra license cost if you have a high performance database or data warehouse. Also, your IBM DB2 support team is ready to help you trial the feature in your environment so give them an email or a call today to improve your overall performance and dramatically reduce your storage costs. Use the DB2 command: DB2licm –l show detail to see whether you already have this DB2 Storage Optimization feature available in your environment. Through this new DB2 LUW Adaptive Compression, your DB2 environment can get huge storage savings, daily application performance improvement and reduce daily backup time.

__________________________________________________

On December 4th, 5th and 6th I will be presenting at the Minneapolis, Milwaukee and Chicago DB2 Users groups doing my two latest presentations detailed below. Hopefully you can come out to the meetings and we can have a good questions and answers session about all the big data and Java applications your company is doing.

Agile Big Data Analytics: Implementing a 22 Billion Row Data

This presentation discusses the design, architecture, meta-data, performance and other experiences building a big data and analytics DW system. You will learn through this presentation the real life issues, agile development considerations, and solutions for building a data warehouse of 22+ billion rows in six months. This presentation will help you understand techniques to manage, design and leverage the big data issues for a more in-depth understanding of your business. Also the agile development processes will be detailed showing how to uncovered complex analytics requirements and other issues early in the development cycle. This presentation will help you understand all these experiences that took processes from 37 hours to seconds so you can create a successful big data design and scalable data warehouse analytic architecture.

Java DB2 Developer Performance Best Practices

Can your Java, J2EE DB2 application sustain a large number of client requests simultaneously? Or do they deadlock, become sluggish, or have painfully slow response times? There are many reasons for java performance bottlenecks and many ways to prevent them. However, sometimes it’s just a matter of following some simple best practices that can make all the difference. This presentation will discuss the java developer best practices, coding for optimum DB2 access and some simple changes you can make, some in the design and some in the coding phases that can help your developers build faster, more robust applications.

DB2 User Groups:

Minneapolis: For more information click here

Milwaukee: For more information click here

Chicago: For more information click here

__________________________________________________________

Dave Beulke is an internationally recognized DB2 consultant, DB2 trainer and education instructor. Dave helps his clients improve their strategic direction, dramatically improve DB2 performance and reduce their CPU demand saving millions in their systems, databases and application areas within their mainframe, UNIX and Windows environments.

Hi David,

Is Adaptive compression good for a database with alot of updates ?

thanks

H

Yes Adaptive Compression and compression in general can make a huge difference in performance by referencing more data with every Db2 I/O. It can also cache more data within the buffer pools. Adaptive Compression provides even more customized compression and optimizes I/O and buffer pool efficiencies.

As long as the data Update processes are updating data in the data row of the same size the adaptive compression will do a good job compressing your data and putting the data back into the same data page slot. Beware of Adaptive or any compression if the Update processes are enlarging the data row length significantly.

Hope this helps

Dave